Most people would probably call this a no-brainer, and it is. Of course the type of disk used in your VDI infrastructure matters for the user experience and the performance of the VDIs. In this article I walk through a PoC in which we were asked to investigate the impact of disk performance on a VDI infrastructure.

Before I start, I want to stress that this is not a scientific article. We are comparing apples with pears. The purpose is not to present exact results, but to show that it is wise to research the disks and the performance you require.

Let’s start with the specific use case. I was involved in a Proof of Concept to see whether a group of software engineers (programmers) could use a VDI solution instead of a local workstation. One important requirement was that the performance should be equal to or better than that of the local workstation. A small mistake (but with a huge impact) was that nobody checked exactly what hardware was in those local workstations. The statement was that an SSD had been added to the systems used for the daily activities of the end users. You can already guess that "an SSD" was too general a statement.

There was already a VDI infrastructure running for other purposes, which had also been identified as resource-intensive tasks. Those workloads run on XenServer with a software-defined storage solution. The physical servers have local disks, and all disks in all servers are presented as one Storage Repository (the data is stored across all disks on all servers). In this existing infrastructure regular 10K SAS disks are used, and they provide the required IOs/performance for those workloads.

For this PoC the servers were equipped with both those regular SAS disks and SSDs. Wait… which SSDs? For servers (and the same applies to workstations) there are different types of SSD available. For the hardware in this project there were two: Enterprise High Performance and Enterprise Value. You can imagine that they differ in performance and price. As the order had already been placed, we found out that the disks were Enterprise Value. For each type of disk (SAS and SSD) we created a separate volume within the software-defined storage solution and offered it as a separate Storage Repository.

On this infrastructure we built the VDIs, built a custom LoginVSI script (representing the user activities) and ran performance tests. This phase was primarily used to find the maximum capacity of the infrastructure. We saw more data throughput and higher IO on the SSDs than on the SAS disks, but we did not see much difference in the total time of the activities between a VDI on SAS and a VDI on SSD. What we did see was that the processes were pretty CPU intensive.
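To illustrate what "CPU intensive" means in practice, here is a minimal Python sketch that compares the CPU time of a task with its wall-clock time. This is not part of the LoginVSI script we used; the function names and the two sample tasks are made up for illustration, and `time.process_time()` only counts CPU time of the current process, not of child processes. A share close to 1 suggests the task is waiting mostly on the CPU, so a faster disk will hardly shorten it.

```python
import time


def cpu_share(task):
    """Run task() once and return (wall_seconds, cpu_seconds, cpu_share)."""
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    task()
    cpu = time.process_time() - cpu_start
    wall = time.perf_counter() - wall_start
    share = cpu / wall if wall > 0 else 0.0
    return wall, cpu, share


def busy_loop():
    # Hypothetical stand-in for a CPU-bound activity such as a compile step.
    total = 0
    for i in range(2_000_000):
        total += i * i
    return total


def mostly_waiting():
    # Hypothetical stand-in for an activity that waits on disk or network.
    time.sleep(0.2)


if __name__ == "__main__":
    for name, task in (("busy_loop", busy_loop), ("mostly_waiting", mostly_waiting)):
        wall, cpu, share = cpu_share(task)
        print(f"{name}: wall={wall:.3f}s cpu={cpu:.3f}s cpu share={share:.0%}")
```

If most activities in a test run look like `busy_loop`, it is no surprise that SAS and SSD end up with similar total times.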

After those performance tests we went straight into the pilot with actual users. In the same time frame we also managed to run the LoginVSI test on the local workstation. The pilot users reported that the activities took much longer than on the local workstation. That finding was definitely true: our LoginVSI runs showed exactly the same result.

With this finding we focused on determining why the difference was that big. We used one specific run in the LoginVSI script, as it had no dependencies on the network and so on. After testing, troubleshooting and ruling things out, we found that in this case the disk really mattered, next to CPU usage. I will leave the CPU out of this article and only go into more detail about the disk.
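If you want a quick first impression of a disk without network dependencies, a small synchronous-write test can help. The sketch below is an assumption on my side and not the test we ran in the PoC: it writes small blocks to a temporary file, forces each one to disk with `os.fsync`, and reports the average time per write. The function name and the default block size and count are arbitrary, and a real assessment should use a proper benchmark tool and a realistic workload.

```python
import os
import tempfile
import time


def time_sync_writes(directory, block_size=4096, count=256):
    """Write `count` blocks into a temp file in `directory`, fsync after
    each write, and return the average seconds per write."""
    payload = os.urandom(block_size)
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        for _ in range(count):
            os.write(fd, payload)
            os.fsync(fd)  # force the block to the device, bypassing the OS cache
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
        os.remove(path)
    return elapsed / count


if __name__ == "__main__":
    # Point this at a directory on the volume you want to compare.
    latency = time_sync_writes(tempfile.gettempdir())
    print(f"average synchronous 4 KiB write: {latency * 1000:.3f} ms")
```

Running it against directories on different volumes (for example one per Storage Repository, from inside a VDI) gives a rough comparison, keeping in mind that caches in the controller or storage layer can flatter the numbers.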

The conclusion was that we could not match the lead time of the local workstation. By running a lot of tests and gathering more information, we found out that in this case the disk really matters.

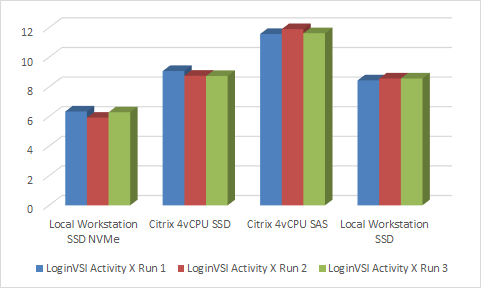

The workstation that the VDI infrastructure was competing with did not use a normal SSD but an NVMe SSD, which is much faster than a regular SSD. The previous type of workstation (which some developers still use) had a regular SSD. We took that one into account as well and also ran specific tests with the VDI, which gave the results shown in image 1. As you can see, the SSD workstation and the SSD Storage Repository show similar results for this specific task. The workstation with the NVMe disk is much quicker than all others. When a single VDI is used per host, the SSD Storage Repository also gives better results than the SAS Storage Repository. With more VDIs per host, as during the first LoginVSI test, the CPU is the most important resource and the difference between the two SRs was almost negligible.

With this outcome we brainstormed about what we could do to meet the requirement of similar performance. Based on the tests we were pretty confident that our only chance to reach that goal was to use NVMe disks in the servers or to switch to a completely different concept. Fortunately we found a supplier that was able to provide us with some test hardware. We got one server (same specs as the servers in the PoC) fitted with 6 NVMe disks (the maximum possible). With this limited hardware we could not use the software-defined storage solution (it requires a minimum of three servers), so we used a local RAID set of the NVMe disks for the tests.

For the other concept we had the option to test with a 4-cartridge Moonshot chassis. We installed the OS (Windows 7 in this case) directly on the cartridges.

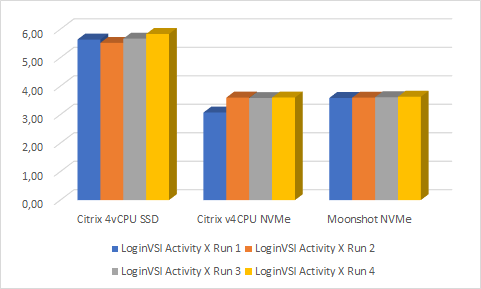

Yes, this is definitely apples with pears, as the characteristics are not the same. Because the content used for the activities had changed, we ran the LoginVSI tests again on the PoC infrastructure to be sure that the results were comparable.

As the image above shows, it is pretty clear that the disk matters. Both the NVMe server and the Moonshot solution gave better results than the regular SSDs (in a software-defined storage solution). It also shows that in our case the hypervisor does not make a big difference, as the NVMe server and the Moonshot have comparable results.

At this phase we had shown that the type of disk makes a big difference, but it also comes at a higher cost. NVMe disks are naturally more expensive, and fewer of them fit in a server than traditional SSDs. This changed the whole business case and ended the PoC for this project.