In this article series I describe and discuss the PVS components you should consider when creating a PVS design. In part 1 I discussed the PVS Farm, Site, Device Collection, View, Store, Database and Load Balancing. In this second part I continue with the design considerations for the remaining PVS components.

Delegation of Control

Citrix Provisioning Services offers four administration roles: one at farm level (Farm Administrator), one at site level (Site Administrator) and two at device collection level (Device Administrator and Device Operator). These roles are assigned to Active Directory groups. It's up to you whether, and to whom, each role is granted. Be careful when granting the Device Operator role: it can work perfectly for VDI infrastructures, but with RDS servers it's probably not a good idea, as an operator can restart Target Devices and restarting a shared RDS server affects every user session on it.

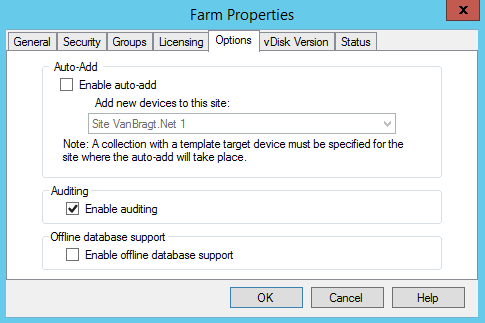
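To make the scoping of these roles concrete, here is a minimal Python sketch that models each role as an assignment of an Active Directory group to a point in the farm/site/collection hierarchy. It assumes that a role granted at one level covers everything beneath it; the group, site and collection names are hypothetical, and this is a simplified model, not how PVS stores or evaluates its permissions.

```python
from dataclasses import dataclass

# Depth of the scope each role is assigned at: farm (1) > site (2) > device collection (3).
ROLE_LEVEL = {
    "Farm Administrator": 1,
    "Site Administrator": 2,
    "Device Administrator": 3,
    "Device Operator": 3,
}


@dataclass(frozen=True)
class RoleAssignment:
    ad_group: str   # hypothetical Active Directory group name
    role: str
    scope: tuple    # path from the farm down to the object the role is assigned on

    def __post_init__(self):
        # Reject e.g. a Site Administrator assigned directly on the farm.
        if len(self.scope) != ROLE_LEVEL[self.role]:
            raise ValueError(f"{self.role} must be assigned at depth {ROLE_LEVEL[self.role]}")

    def covers(self, target: tuple) -> bool:
        """Assumption: a role applies to its own scope and everything beneath it."""
        return target[: len(self.scope)] == self.scope


def roles_for(groups, assignments, target):
    """Return the sorted roles a member of `groups` holds on `target`."""
    return sorted({a.role for a in assignments if a.ad_group in groups and a.covers(target)})


assignments = [
    RoleAssignment("PVS-FarmAdmins", "Farm Administrator", ("Farm",)),
    RoleAssignment("PVS-SiteA-Admins", "Site Administrator", ("Farm", "SiteA")),
    RoleAssignment("PVS-VDI-Operators", "Device Operator", ("Farm", "SiteA", "VDI")),
]

print(roles_for({"PVS-VDI-Operators"}, assignments, ("Farm", "SiteA", "VDI")))  # ['Device Operator']
print(roles_for({"PVS-VDI-Operators"}, assignments, ("Farm", "SiteA", "RDS")))  # []
```

In this model the operators of the VDI collection get no rights on a separate RDS collection, which is one way to keep the Device Operator role away from RDS servers.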

Logging/Auditing

The logging level can be configured both on the PVS server and on the Target Device. In PVS 6.1 this information is stored in log files; in PVS 7.x the logging is viewed using Citrix tools. PVS server logging can also be written to the Windows Event Log. PVS also supports auditing, which records all changes made in the product. The auditing is implemented very well: it records which setting was changed, including both the old and the new value. So even when no security policy requires auditing, I would enable it.

Scalability / Advanced Settings

The number of PVS servers you need depends on so many factors that it could fill an article of its own. You need at least two PVS servers for load balancing and fault tolerance. A single PVS server can handle a lot of Target Devices; Citrix published an article in which 1500 devices were connected to one PVS server. By default a PVS server can handle 160 concurrent transactions, and Citrix advises reserving one transaction per active Target Device. In other words, by default a PVS server can handle 160 Target Devices. Fortunately this number is easily increased by adding ports and/or increasing the number of threads per port. Most implementations only increase the threads per port.

This setting is available in the Advanced Settings of each PVS server, where several other settings can be configured as well. The unofficial Citrix advice is that nothing needs to be changed for 300 to 500 devices per PVS server. I do not fully agree with this statement, because some settings should be changed in every environment and others really depend on your infrastructure. I published an earlier article about these advanced settings; please check that article for all the details.

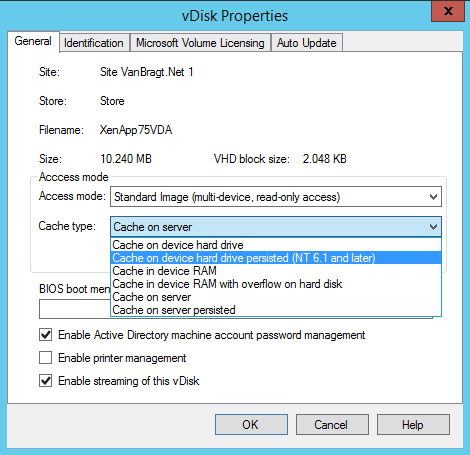
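The capacity arithmetic behind this advice is simple: concurrent transactions equal the number of ports multiplied by the threads per port, and with one transaction per active Target Device that is also the number of devices a server can serve. The Python sketch below assumes the default of 160 is made up of 20 ports with 8 threads each; check the actual port range and threads per port on your own servers. The device count in the example is hypothetical.

```python
import math

# Assumption: the default 160 concurrent transactions = 20 ports x 8 threads per port.
DEFAULT_PORTS = 20
DEFAULT_THREADS_PER_PORT = 8


def concurrent_transactions(ports: int, threads_per_port: int) -> int:
    """Total transactions a PVS server can handle at once."""
    return ports * threads_per_port


def threads_per_port_needed(active_devices: int, ports: int = DEFAULT_PORTS) -> int:
    """Threads per port needed to reserve one transaction per active Target Device,
    leaving the number of ports unchanged (the approach most implementations take)."""
    return math.ceil(active_devices / ports)


print(concurrent_transactions(DEFAULT_PORTS, DEFAULT_THREADS_PER_PORT))  # 160
# Hypothetical: 500 active Target Devices on one server, ports left at 20.
print(threads_per_port_needed(500))  # 25
```

Adding ports instead reaches the same total with fewer threads on each port; the sketch only shows the threads-per-port route because that is the one most implementations use.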

vDisk

A vDisk has two modes: private image and standard image. In private image mode the vDisk has a one-to-one relationship with a Target Device, and changes are retained after the Target Device restarts. This mode is normally only used to create a vDisk that will then run in standard mode. A vDisk in standard image mode can be connected to multiple Target Devices, and after a restart each Target Device is back in the original vDisk state (changes are not retained). Normally every PVS infrastructure runs its vDisks in standard image mode to deliver the XenApp or XenDesktop published desktops/applications to the end users.

When creating a vDisk, the VHD type needs to be set: Fixed or Dynamic. In the past Fixed was recommended, as the expectation was that it performed better than a Dynamic disk. However, Citrix investigated this and found no performance difference between a Fixed and a Dynamic vDisk. To save disk space I recommend creating the vDisk as a Dynamic VHD. For a Dynamic VHD the block size can be set to 2 or 16 MB. I normally use 16 MB, as most changes are bigger than 2 MB.
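What the block size means for a Dynamic VHD can be shown with a simplified Python sketch: a dynamic VHD allocates space in whole blocks, so a write that touches part of a block allocates the full block. The sketch ignores VHD metadata (headers, block allocation table, sector bitmaps), assumes each write is contiguous and lands in unallocated space, and uses hypothetical write sizes.

```python
MB = 1024 * 1024


def blocks_touched(offset: int, size: int, block_size: int) -> int:
    """Number of dynamic-VHD blocks a contiguous write of `size` bytes at `offset` touches."""
    if size <= 0:
        return 0
    first = offset // block_size
    last = (offset + size - 1) // block_size
    return last - first + 1


def allocation(offset: int, size: int, block_size: int) -> tuple:
    """(blocks allocated, bytes allocated) for a write into unallocated space."""
    n = blocks_touched(offset, size, block_size)
    return n, n * block_size


for block_mb in (2, 16):
    for write_mb in (1, 10):
        n, allocated = allocation(0, write_mb * MB, block_mb * MB)
        print(f"block {block_mb:>2} MB, write {write_mb:>2} MB: "
              f"{n} block(s), {allocated // MB} MB allocated")
```

With 16 MB blocks a 10 MB change needs one allocation instead of five, at the cost of allocating more space for small changes.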

Persistent Disk

Although PVS can function without a disk attached to the Target Devices, in many cases a disk is still attached. One reason is storing large files, or large amounts of files, such as the App-V cache or a page file. A second reason for a persistent disk is keeping information that would normally be lost if it were on the vDisk, such as event logs and other application log files. The last reason is storing the write cache on the device hard drive; more on that in the next topic.

Write Cache

Because a standard-mode vDisk is not changed by the Target Devices, the changes (writes) a Target Device makes while running need to be stored somewhere. Within PVS this is called the Write Cache. PVS offers several options for the write cache:

-Cache on device hard drive

Up to PVS 7.1 this was the most used write cache type. The write cache is stored on a hard disk attached to the Target Device, so the persistent disk discussed earlier must be available. It is the most used because it is probably the easiest to implement and is considered the least risky (although I don't agree with the risk argument). You should also take IOPS into account and determine whether your storage can handle the load.

-Cache on device hard drive persisted

The same concept as Cache on device hard drive, except that the data in the write cache is not cleared at reboot; in other words, changes are retained. I have never seen this option used in a production environment, so I cannot share much information about it. This type also has a known bug with ASLR.

-Cache in device RAM

When using Cache in device RAM, you add extra memory to the Target Device in which the write cache is stored, so you need memory both for normal operation and for the write cache. Storing data in RAM is one of the best-performing options available. Many people consider Cache in device RAM a bit dangerous, as you need to define the amount of memory that may be used for the write cache, and if this amount is fully used the Target Device will blue screen. But it's really just a matter of sizing: if the device hard drive is too small, the same thing happens. This discussion is less relevant now, as PVS 7.1 introduced a new write cache type.

-Cache in device RAM with overflow on hard disk

This option was introduced in PVS 7.1, primarily to solve the ASLR bug in the Cache on device hard drive write cache type. Many people also like it because you can use the write cache in RAM without running into trouble when the RAM is full, as the cache then overflows to the hard disk. Personally, however, I think the biggest advantage is the discovery that this type was implemented differently, resulting in much better performance than all the other write cache types. Because of this performance gain I advise every organization to use this write cache type, regardless of whether you primarily use memory or disk.

-Cache on server

This write cache type stores the write cache on the PVS server. It can be used for a small PoC to explore the PVS possibilities, but it is not suitable for production environments, because the data travels over the network twice: first it is streamed from the PVS server to the Target Device, and then the Target Device writes its changes back to the PVS server.

-Cache on server persistent

The same applies to Cache on server persistent as to the normal Cache on server, except that changes are retained when the Target Device is restarted.

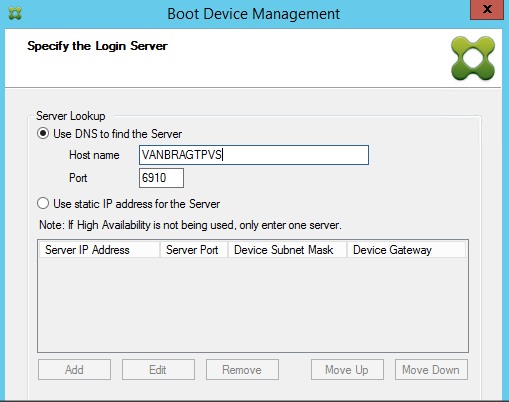
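The difference between Cache in device RAM and Cache in device RAM with overflow on hard disk can be illustrated with a toy model. The Python sketch below is a simplified model assumed purely for illustration, not the actual PVS driver: writes fill a RAM buffer of a configured size; with overflow enabled, whatever no longer fits spills to the device hard drive, and without overflow a full cache raises an error standing in for the blue screen. All sizes are hypothetical and in MB.

```python
class WriteCacheFull(Exception):
    """Stands in for the blue screen a Target Device hits when its write cache is full."""


class WriteCache:
    def __init__(self, ram_mb: int, disk_mb: int = 0, overflow: bool = False):
        self.ram_mb = ram_mb      # RAM configured for the write cache
        self.disk_mb = disk_mb    # free space on the device hard drive
        self.overflow = overflow  # True models "RAM with overflow on hard disk"
        self.used_ram = 0
        self.used_disk = 0

    def write(self, mb: int) -> None:
        """Store `mb` of changes; check both limits before committing anything."""
        in_ram = min(mb, self.ram_mb - self.used_ram)
        rest = mb - in_ram
        if rest and not self.overflow:
            raise WriteCacheFull("RAM write cache exhausted")
        if rest > self.disk_mb - self.used_disk:
            raise WriteCacheFull("overflow disk exhausted")
        self.used_ram += in_ram
        self.used_disk += rest


ram_only = WriteCache(ram_mb=512)
ram_only.write(400)
try:
    ram_only.write(200)
except WriteCacheFull as err:
    print(f"RAM only: {err}")

with_overflow = WriteCache(ram_mb=512, disk_mb=10240, overflow=True)
with_overflow.write(400)
with_overflow.write(200)
print(with_overflow.used_ram, with_overflow.used_disk)  # 512 88
```

Setting `ram_mb=0` with overflow enabled sends every write to disk, which mirrors using this type with disk as the primary store. The model also fails once the overflow disk is full, which is the sizing point made above.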

Client Boot

The Target Device needs to contact (one of) the PVS server(s). This can be done with two methods: PXE or BDM.

PXE is a well-known protocol for booting machines and connecting them to a (deployment) system. After the PXE process, TFTP is used to download the bootstrap file. The difficulty with PXE and TFTP is that they are not easy to make fault tolerant, especially when DHCP options and IP helpers are required. However, when the PVS servers and the Target Devices are located in the same dedicated VLAN it's pretty easy, as PXE will try a broadcast first. If multiple PVS servers are available in that VLAN, the first one to respond is used. Citrix calls this DHCP Proxy. In other scenarios it is also possible to set up a load-balanced, fault-tolerant PXE and TFTP infrastructure; Nick Rintalan wrote a good article about these possibilities.

The second method is using the Boot Device Manager (BDM). This is a boot ISO or disk attached to the Target Device that contains all the information needed to connect to the PVS infrastructure. BDM also needs to be made highly available, especially when the ISO file is used within hypervisor infrastructures. In PVS 7.x a BDM can also be created together with the XenDesktop 7.x VDA, but this is again a one-to-one relationship: if the server information changes, you need to change every affected VM. On the other hand, BDM is less dependent on the network infrastructure, which can be a big advantage. BDM can also connect based on DNS, so more than the default four PVS servers can be provided to the Target Devices.
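The DNS advantage can be illustrated with a short Python sketch: a static boot configuration is limited to a fixed number of server entries (four, per the text above), while a single DNS name can resolve to as many addresses as you register for it. Any host name you pass in (for example a hypothetical `pvs.example.local` with one A record per PVS server) is an assumption; the sketch only demonstrates name resolution with the standard library and is not how the BDM bootstrap itself is implemented.

```python
import socket

MAX_STATIC_SERVERS = 4  # the default limit on explicitly listed PVS servers mentioned above


def static_server_list(addresses):
    """Validate a fixed list of PVS server addresses against the four-entry limit."""
    if len(addresses) > MAX_STATIC_SERVERS:
        raise ValueError(f"at most {MAX_STATIC_SERVERS} servers can be listed")
    return list(addresses)


def resolve_all(hostname):
    """Return every unique address a DNS name resolves to, in the order returned."""
    addresses = []
    for _family, _type, _proto, _canon, sockaddr in socket.getaddrinfo(
        hostname, None, type=socket.SOCK_DGRAM
    ):
        if sockaddr[0] not in addresses:
            addresses.append(sockaddr[0])
    return addresses
```

Calling `resolve_all("pvs.example.local")` against such a record would return one address per registered server, with no four-entry cap; a failed lookup raises `socket.gaierror`.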

There is no general advice on which method is best; it really depends on the case.

Summary

In the article series Citrix Provisioning Services Design I walked through the most important PVS components and the considerations to make when designing and setting up a PVS infrastructure. In part 1 I discussed the PVS Farm, Site, Device Collection, View, Store, Database and Load Balancing. This second and last part covered Delegation of Control, Logging/Auditing, Scalability/Advanced Settings, vDisk, Persistent Disk, Write Cache and Client Boot, hopefully providing a good overview of the considerations to make when designing and building a PVS infrastructure.